World in Conflict

Publisher: Sierra

We regard World in Conflict as one of the best real-time strategy games we've ever played. It supports Microsoft's DirectX 10 API and, thanks to a collaboration with Nvidia's The Way It's Meant To Be Played developer support team, it incorporates several DirectX 10-specific graphics effects.

The first of these is soft particles, which remove the banding often found in particle effects such as smoke, explosions, fire and debris. Previously, these effects didn't really exist in the 3D world; they were merely an add-on layered on top of it. With DirectX 10, the particle effects interact with their 3D surroundings because they're actually part of the 3D world, so their edges are much softer and banding is almost non-existent.
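To illustrate the general idea behind soft particles (often called "depth fade"), here is a minimal Python sketch. It is not World in Conflict's actual shader: the function name `soft_particle_alpha` and the `fade_distance` parameter are hypothetical, and it assumes the renderer can read the depth of the opaque scene behind each particle fragment. Fragments close to solid geometry fade out instead of ending in a hard, clipped edge.

```python
def soft_particle_alpha(particle_alpha: float, scene_depth: float,
                        particle_depth: float, fade_distance: float) -> float:
    """Scale a particle fragment's opacity by how far it sits in front of
    the opaque geometry behind it (hypothetical depth-fade sketch)."""
    # Gap between the particle fragment and the scene geometry behind it.
    gap = scene_depth - particle_depth
    # Map the gap to [0, 1]: fragments touching or behind geometry fade to
    # zero; fragments at least fade_distance in front keep full opacity.
    fade = min(max(gap / fade_distance, 0.0), 1.0)
    return particle_alpha * fade


# A fragment a quarter of a unit in front of the ground, with a half-unit fade
# distance, is drawn at half its original opacity.
print(soft_particle_alpha(1.0, 10.25, 10.0, 0.5))
```

In a real renderer this calculation would run per pixel in a shader, with the scene depth sampled from the depth buffer.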

The DirectX 10 version of the game also adds global cloud shadowing and volumetric lighting. Volumetric lighting is often referred to as 'god rays', and its implementation in World in Conflict interacts with the surroundings incredibly well. Cloud shadowing means clouds cast shadows on the rest of the environment. Because all clouds in World in Conflict are volumetric and dynamic, their shadows are rendered dynamically in DirectX 10, adjusting to the size, shape and orientation of each cloud relative to the light source.

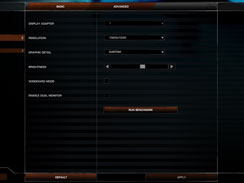

For testing, we used a full retail copy of the game patched to version 1.007, which includes a few fixes and some improved performance under DirectX 10. We used a manual run-through of the Invasion level, which incorporates all of the effects discussed above. We chose not to use the built-in benchmark because it's largely CPU-limited. We used the "Very High" preset and controlled anti-aliasing and anisotropic filtering via the advanced settings tab.

Once again, the GeForce 9800 GX2 puts in an impressive showing: it manages to eke out a 10 percent performance advantage over the GeForce 8800 GTS 512MB SLI configuration at both 1920x1200 2xAA 16xAF and 2560x1600 0xAA 16xAF, with all in-game details set to the 'Very High' preset.

The ATI Radeon HD 3870 X2 showed the same strange behaviour we've seen before at 1680x1050, where performance is lower than at higher resolutions. It's a bug that we've reported to AMD, but the fix (if there is one yet) doesn't appear to have been implemented. On the whole, the 3870 X2 limps through World in Conflict as the slowest card in this review, and worst of all, it's slower than the now 10-month-old GeForce 8800 Ultra with its single G80 GPU. Ouch.
